News

Anca Around the World – Conferences from the Past Year

Anca Dragan has been busy over the past year, attending conferences and delivering talks everywhere from Berkeley to Switzerland to promote the safe development of AI and robotics. She has attended a variety of events, including:

CHAI Students at Workshop on AI Alignment

26 Aug 2018

This weekend workshop, the second on approaches to AI alignment, brought together research interns from MIRI and UC Berkeley’s Center for Human-Compatible AI (CHAI) to discuss conceptual foundations and open problems in AI safety research.

Why is Facebook Keen on Robots? It’s Just the Future of AI

17 Jul 2018

CHAI faculty members Pieter Abbeel and Bart Selman give their thoughts on Facebook and robots. Read the article here.

Stuart Russell and Anca Dragan Publish Paper on “An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning”

16 Jul 2018

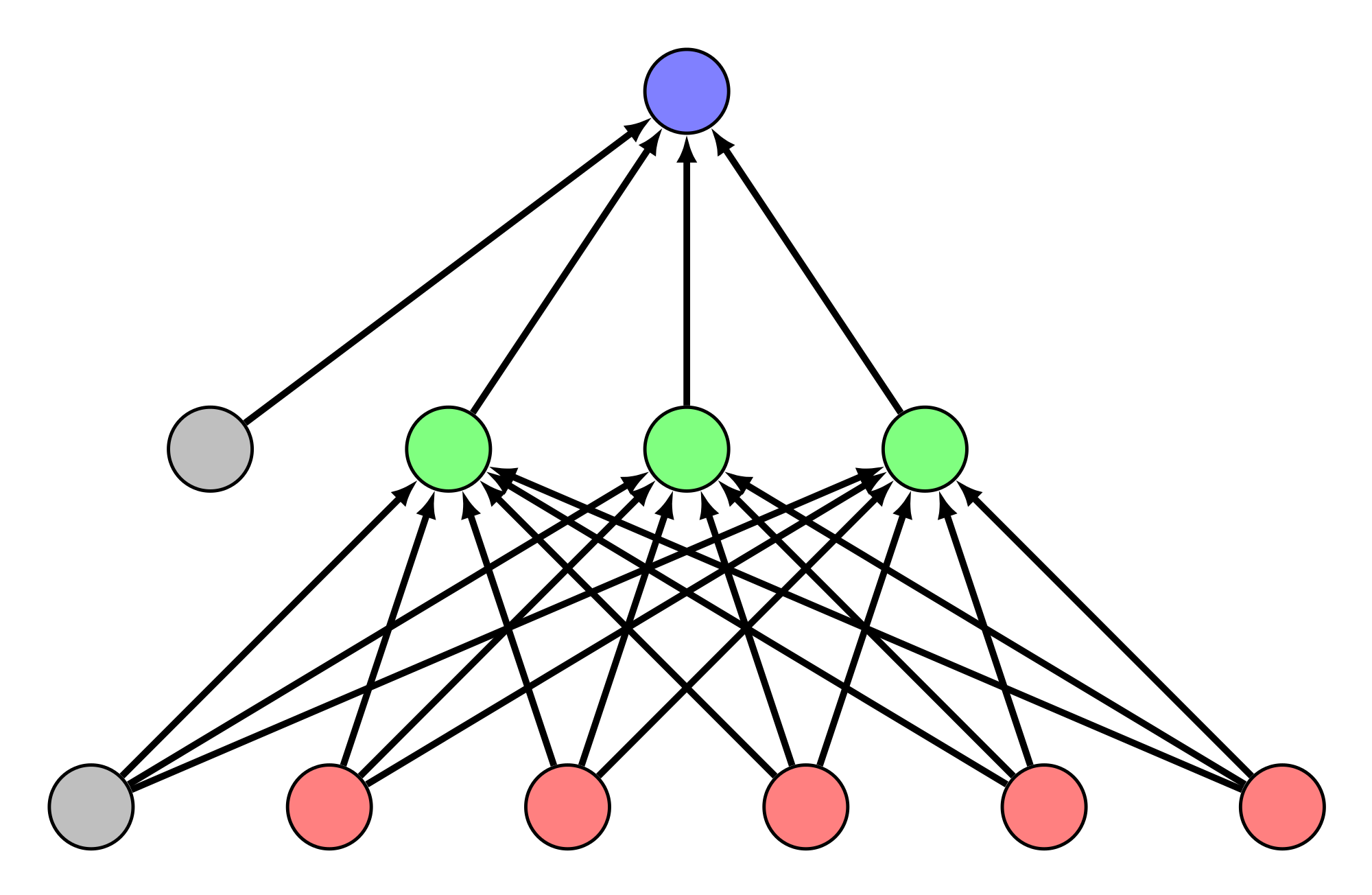

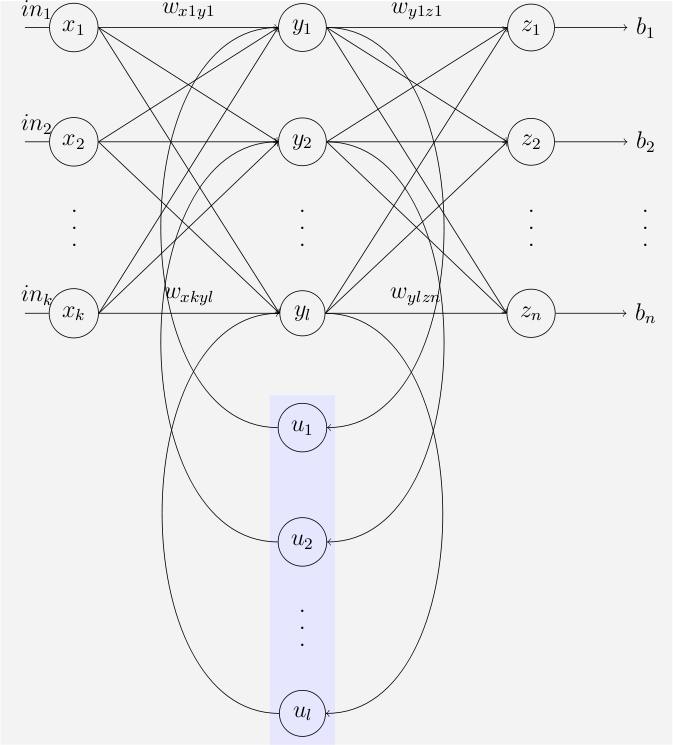

CHAI PI Stuart Russell and co-PI Anca Dragan, with a number of other authors from Berkeley’s School of Electrical Engineering and Computer Science, published “An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning” in the Proceedings of the 35th International Conference on Machine Learning, held in Stockholm, Sweden, in July 2018. The paper’s abstract states that:

Three CHAI Researchers Present at the GoalsRL Workshop

14 Jul 2018

Adam Gleave and Rohin Shah attended the 2018 GoalsRL Workshop and presented the paper Active Inverse Reward Design. Adam also presented a paper on Multi-task Maximum Entropy Inverse Reinforcement Learning, and Daniel Filan presented the paper Exploring Hierarchy-Aware Inverse Reinforcement Learning.

CHAI’s Adam Gleave and Rohin Shah Present “Active Inverse Reward Design”

Two of CHAI’s researchers presented this paper at the 1st Workshop on Goal Specifications for Reinforcement Learning, FAIM 2018. The abstract reads: