News

Anca Dragan Hosts Workshop on Machine Learning for Intelligent Transportation at NeurIPS 2018

15 Jan 2019

Over 150 experts from all areas attended CHAI PI Anca Dragan’s workshop to discuss the challenges involved in applying machine learning to transportation, such as coordination both between vehicles and between vehicles and humans, object tracking, and more.

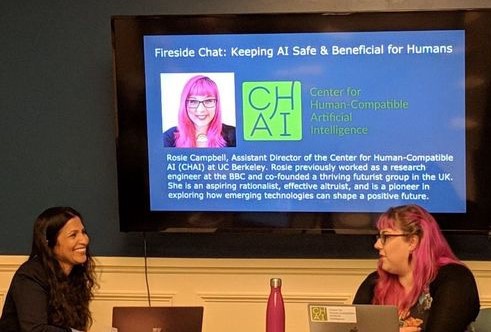

Rosie Campbell Speaks About AI Safety and Neural Networks at San Francisco and East Bay AI Meetups

08 Jan 2019

CHAI Assistant Director Rosie Campbell gave a talk at the San Francisco AI Meetup to explain in basic terms what a neuron is, how neurons combine to form a network, why convolutional neural networks have received so much attention, and the challenges and opportunities of AI progress. See the slides here and a write-up of an earlier version of the talk here. Rosie also sat down for a chat with tech blogger Mia Dand on “Keeping AI Safe and Beneficial for Humanity”. Their discussion covered topics such as CHAI’s mission and approach, the problem of AI alignment, and why you might or might not believe AI safety research is important.

Congratulations to Our 2017/2018 Intern Cohort!

01 Jan 2019

“Interning at CHAI has been one of those rare experiences that makes you question beliefs you didn’t realize were there to be questioned… I’m coming away with a very different view on what kind of research is valuable and where the field of safety research currently stands” – Matthew Rahtz

CHAI Influences Vox Article

21 Dec 2018

Stuart Russell and Rosie Campbell were quoted in the piece “The Case for Taking AI Seriously as a Threat to Humanity”. It was featured in Vox’s Future Perfect section, which focuses on covering high-impact causes such as climate change, AI safety, and animal welfare. The article covers everything from the basics of what AI is to the arguments for why AI could pose a danger to humanity.

Rohin Shah Featured on AI Alignment Podcast

17 Dec 2018

CHAI grad student Rohin Shah was featured on the Future of Life Institute’s AI Alignment Podcast, where he talked about inverse reinforcement learning and the future of value alignment. The podcast can be accessed here.

Actual Causality – a Survey by Joe Halpern

16 Dec 2018

Joe Halpern gave a talk on mathematical models of causality at the Societal Concerns in Algorithms and Data Analysis conference. The slides can be found here.

Stuart Russell Featured in MIT Interview

09 Dec 2018

Stuart Russell was interviewed by Lex Fridman, a research scientist at MIT who focuses on human-centered AI. The long-form interview goes into great detail on general intelligence, progress in AI, current and future AI risks, and much more.

CHAI Attends NeurIPS Conference

08 Dec 2018

CHAI graduate students Rohin Shah, Adam Gleave, Daniel Filan, and Dylan Hadfield-Menell, along with interns Matthew Rahtz and Aaron Tucker, attended the NeurIPS 2018 Conference, where they presented papers, met with researchers, and learned about work in the rest of the field.