News

Mark Nitzberg Publishes WIRED Article Advocating for an FDA for Algorithms

15 Aug 2019

CHAI’s Executive Director Mark Nitzberg, along with Olaf Groth, published an article in WIRED Magazine that advocates for the creation of an “FDA for algorithms.”

Rohin Publishes “Learning Biases and Rewards Simultaneously”

05 Jul 2019

Rohin Shah published a short summary of the CHAI paper “On the Feasibility of Learning, Rather than Assuming, Human Biases for Reward Inference” on the Alignment Forum, along with some discussion of its implications.

CHAI Releases Imitation Learning Library

Steven Wang, Adam Gleave, and Sam Toyer put together an extensible and benchmarked implementation of imitation learning algorithms commonly used at CHAI (notably GAIL and AIRL) for public use. You can visit the GitHub repository here.

CHAI Paper Featured in New Scientist Article

01 Jul 2019

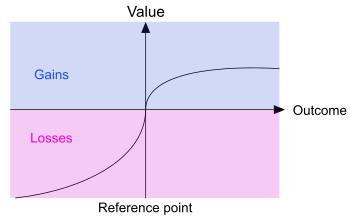

A recent New Scientist article features a paper that Tom Griffiths and Stuart Russell wrote along with David D. Bourgin, Joshua C. Peterson, and Daniel Reichman. The article discusses how the researchers built a machine learning model that takes into account human biases, such as risk aversion, that are usually hard for computer systems to model.

CHAI Presents Paper on Adversarial Learning at ICML

14 Jun 2019

CHAI researchers Michael Dennis, Adam Gleave, Cody Wild, Neel Kant, and Stuart Russell, along with Sergey Levine, gave a talk on their paper Adversarial Policies: Attacking Deep Reinforcement Learning at the International Conference on Machine Learning 2019. There is a video of the talk on the ICML Github (starts at 1h:35m) and the slides can be found here.

Michael Littman Gives Talk on Reward Design for Cooperation at CHAI

12 Jun 2019

Michael Littman, a professor at Brown University, recently gave a talk at CHAI on Reward Design for Cooperation. Professor Littman also runs the Humanity-Centered Robotics Initiative with Bertram Malle and Peter Haas.

Founders Pledge Recommends Giving to CHAI

Founders Pledge, a non-profit organisation whose member entrepreneurs commit to giving a percentage of their proceeds when they sell their businesses, has recommended CHAI as an impactful donation opportunity.

In their article, they discuss existential risk as a cause area to donate to, including the risks of misaligned artificial intelligence. The article can be found here.

CHAI Faculty Paper Accepted to ICML

07 Jun 2019

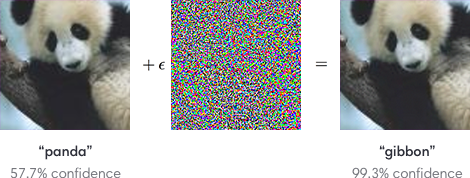

David Bourgin, Joshua Peterson, Daniel Reichman, Thomas Griffiths, and Stuart Russell submitted the paper Cognitive Model Priors for Predicting Human Decisions to the International Conference on Machine Learning 2019. The abstract can be found below:

Vincent Corruble Joins CHAI as Visiting Researcher

30 May 2019

We would like to give a warm welcome to Vincent Corruble as our newest visiting researcher! Vincent is a professor at Sorbonne University and a researcher at the Laboratoire d’Informatique de Paris 6, one of the largest computer science labs in France. He will be at CHAI for the next three months, continuing his research on characterising and mitigating the risks of a comprehensive AI that anticipates, satisfies, and preempts all human needs and desires, and on simulating the emergence of ethical values in a society of learning agents.