News

Hertz Foundation Awards Fellowship to Alyssa Dayan

15 Jun 2020

Alyssa Dayan, an incoming PhD student advised by Stuart Russell, was awarded a fellowship from The Hertz Foundation.

Andrew Critch and David Krueger Publish “AI Research Considerations for Human Existential Safety (ARCHES)”

30 May 2020

Andrew Critch, CHAI’s Research Scientist, and David Krueger have published “AI Research Considerations for Human Existential Safety (ARCHES).”

Vael Gates and Professors Anca Dragan and Tom Griffiths Publish “How to Be Helpful to Multiple People at Once”

05 May 2020

PhD student Vael Gates and Professors Anca Dragan and Tom Griffiths published “How to Be Helpful to Multiple People at Once” in the journal Cognitive Science.

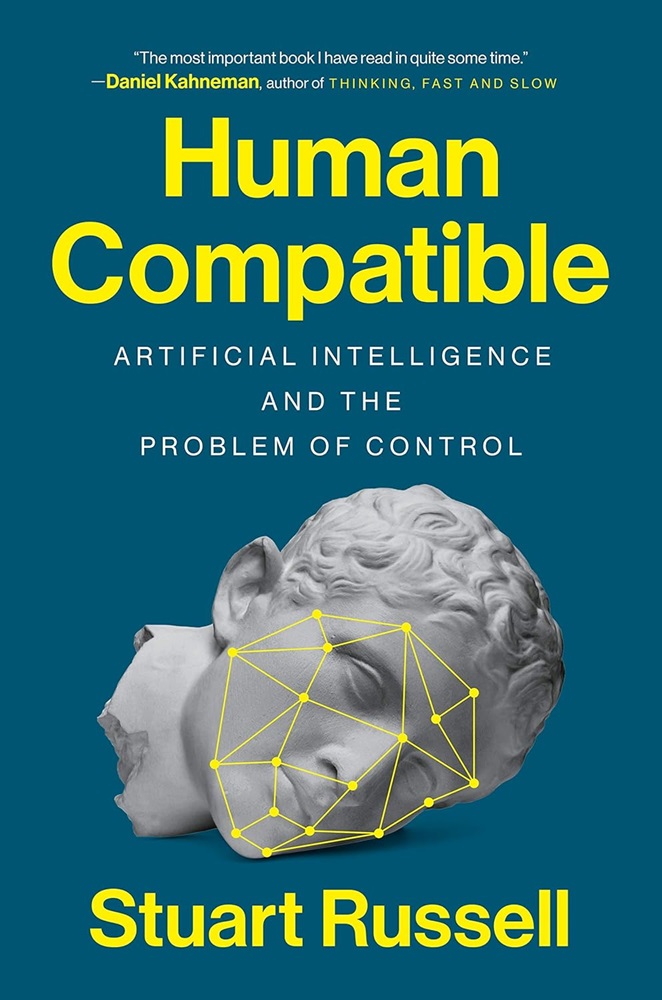

Human Compatible Has Been Reissued in the UK

30 Apr 2020

April 30th, 2020 was the UK publication date for the paperback edition of Stuart Russell’s book “Human Compatible: AI and the Problem of Control.” It is a reissue of the original hardback edition, published in October 2019, which has been described as “the most important book on AI so far.”

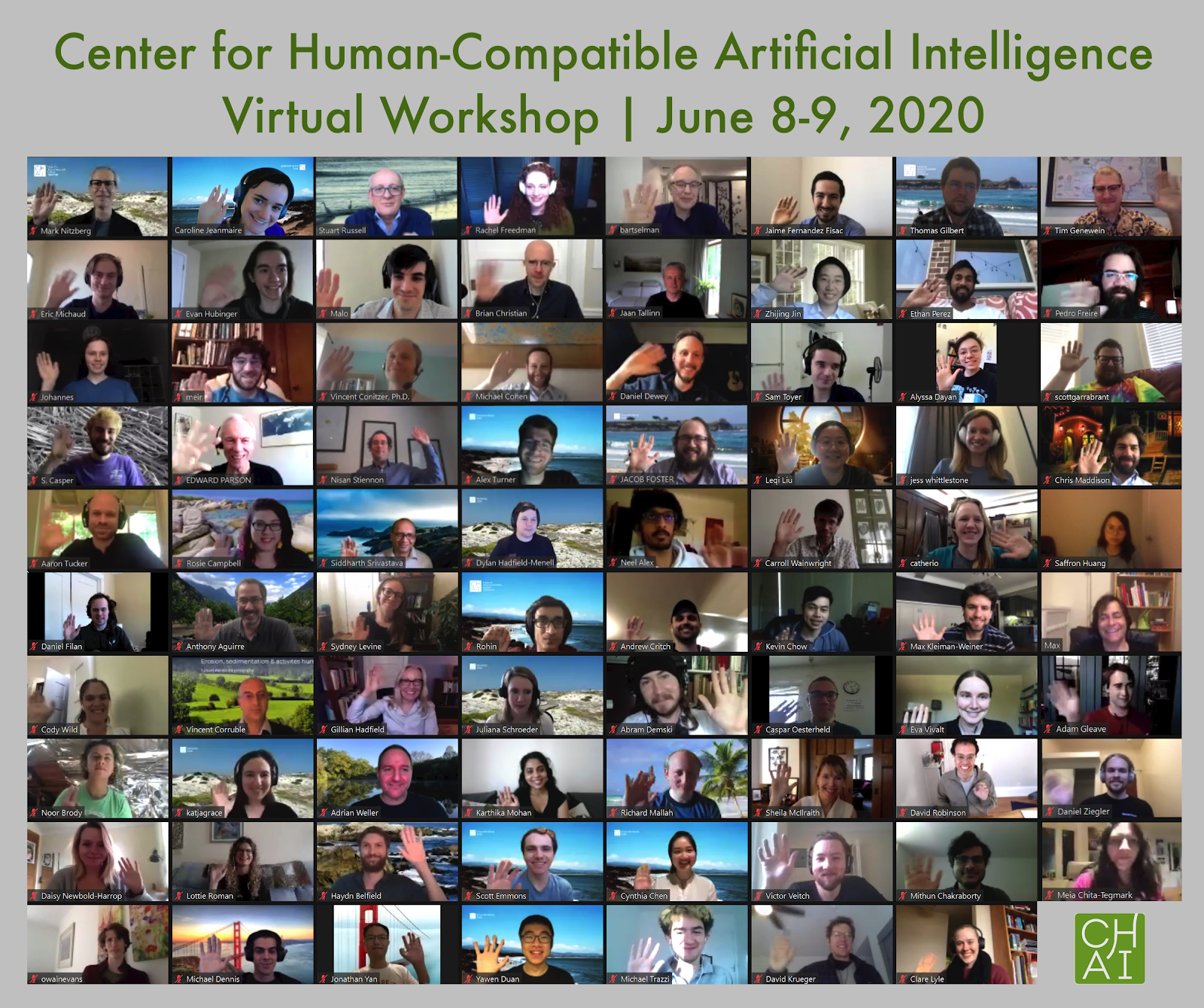

Professor Stuart Russell and Caroline Jeanmaire Organize Virtual Workshop Titled “AI Economic Futures”

19 Apr 2020

Professor Stuart Russell and Caroline Jeanmaire, Director of Strategic Research and Partnerships at CHAI, organized an inaugural virtual workshop in collaboration with the Global AI Council at the World Economic Forum.

Professor Anca Dragan Features on Podcast “Artificial Intelligence”

05 Mar 2020

Professor Anca Dragan was featured on the podcast “Artificial Intelligence” with Lex Fridman. In the episode, they focus on the difficulties of human-robot interaction during semi-autonomous driving.

“Hard Choices in Artificial Intelligence: Addressing Normative Uncertainty Through Sociotechnical Commitments” Accepted by AIES

21 Feb 2020

The AAAI/ACM Conference on AI, Ethics, and Society (AIES) 2020 accepted a paper, “Hard Choices in Artificial Intelligence: Addressing Normative Uncertainty through Sociotechnical Commitments,” coauthored by CHAI machine ethics researcher Thomas Gilbert.

Rohin Shah Writes Detailed Review of Public Work in AI Alignment

18 Jan 2020

CHAI researcher Rohin Shah wrote a detailed review, posted on the AI Alignment Forum, of public work in AI alignment from 2019. The review features work on topics such as AI risk analysis, value learning, robustness, and field building.