News

Learning to Coordinate with Experts

07 Mar 2025

Khanh Nguyen, Benjamin Plaut, Tu Trinh, and Mohamad Danesh introduce a fundamental coordination problem called Learning to Yield and Request Control (YRC), where the objective is to learn a strategy that determines when to act autonomously and when to seek expert assistance. They build an open-source benchmark featuring diverse domains, propose a novel validation approach, and investigate the performance of various learning methods across diverse environments, yielding insights that can guide future research.

Computational Frameworks for Human Care

20 Feb 2025

Brian Christian, CHAI Affiliate, has published an article titled “Computational Frameworks for Human Care” in the most recent issue of Daedalus, the journal of the American Academy of Arts and Sciences. In it, Christian traces how AI alignment has progressed from simple reward mechanisms toward care-like relationships, revealing both the potential and limitations of machine caregiving while deepening our understanding of human care itself. The issue is titled “The Social Science of Caregiving” and was co-edited by CHAI Affiliate Alison Gopnik.

A Practical Definition of Political Neutrality for AI

04 Feb 2025

NEW: Our current research project to build political neutrality evaluations.

Getting By Goal Misgeneralization With a Little Help From a Mentor

25 Dec 2024

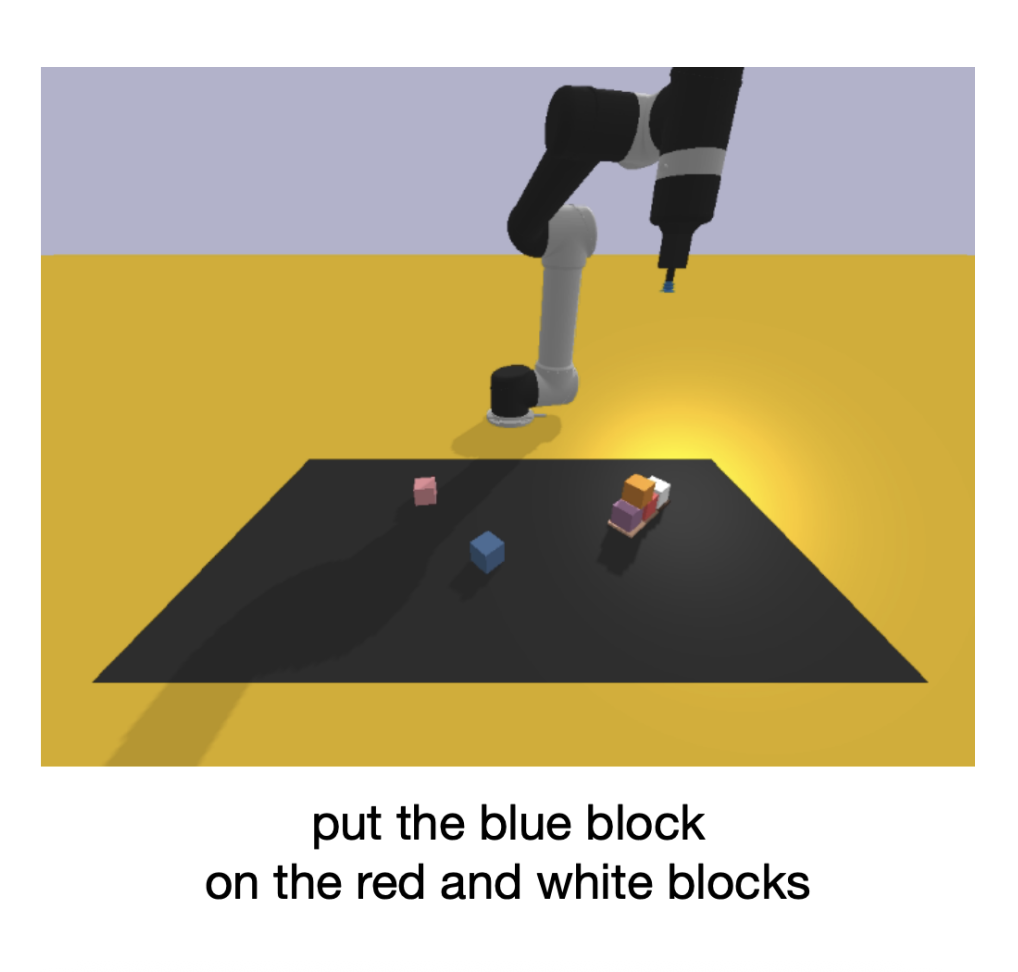

“Tu Trinh, Ben Plaut, Khanh Nguyen, and Mohamad Danesh wrote the paper, “Getting By Goal Misgeneralization With a Little Help From a Mentor.” This paper explores whether goal misgeneralization can be mitigated by allowing an agent to ask for help when it is uncertain. The answer is mostly yes, although our current methods have substantial weaknesses and there are lots of interesting avenues for future work.”Tu Trinh, Ben Plaut, Khanh Nguyen, and Mohamad Danesh wrote the paper, This paper explores whether goal misgeneralization can be mitigated by allowing an agent to ask for help when it is uncertain. The answer is mostly yes, although our current methods have substantial weaknesses and there are lots of interesting avenues for future work.

Linear Probe Penalties Reduce LLM Sycophancy

14 Dec 2024

Visiting ETH MsC student Henry Papadatos and supervising CHAI PhD student Rachel Freedman publish an article “Linear Probe Penalties Reduce LLM Sycophancy” at the NeurIPS SoLaR workshop. The paper demonstrates a generalizable methodology for reducing unwanted LLM behaviors that are not sufficiently disincentivized by RLHF fine-tuning

Rachel Freedman selected as inaugural Cooperative AI Fellow

30 Nov 2024

Rachel Freedman, PhD Student, has been selected as one of the fellows for Cooperative AI’s PhD Fellow Program.

Representative Social Choice: From Learning Theory to AI Alignment

12 Nov 2024

Tianyi Qiu, CHAI Intern, wrote this paper which was accepted by NeurIPS 2024 Pluralistic Alignment Workshop. Here is the link to the paper.

Getting By Goal Misgeneralization With a Little Help From a Mentor

10 Oct 2024

Khanh Nguyen, Mohamad Danesh, Ben Plaut, and Alina Trinh wrote this paper which was presented at Towards Safe & Trustworthy Agents Workshop at NeurIPS 2024.