News

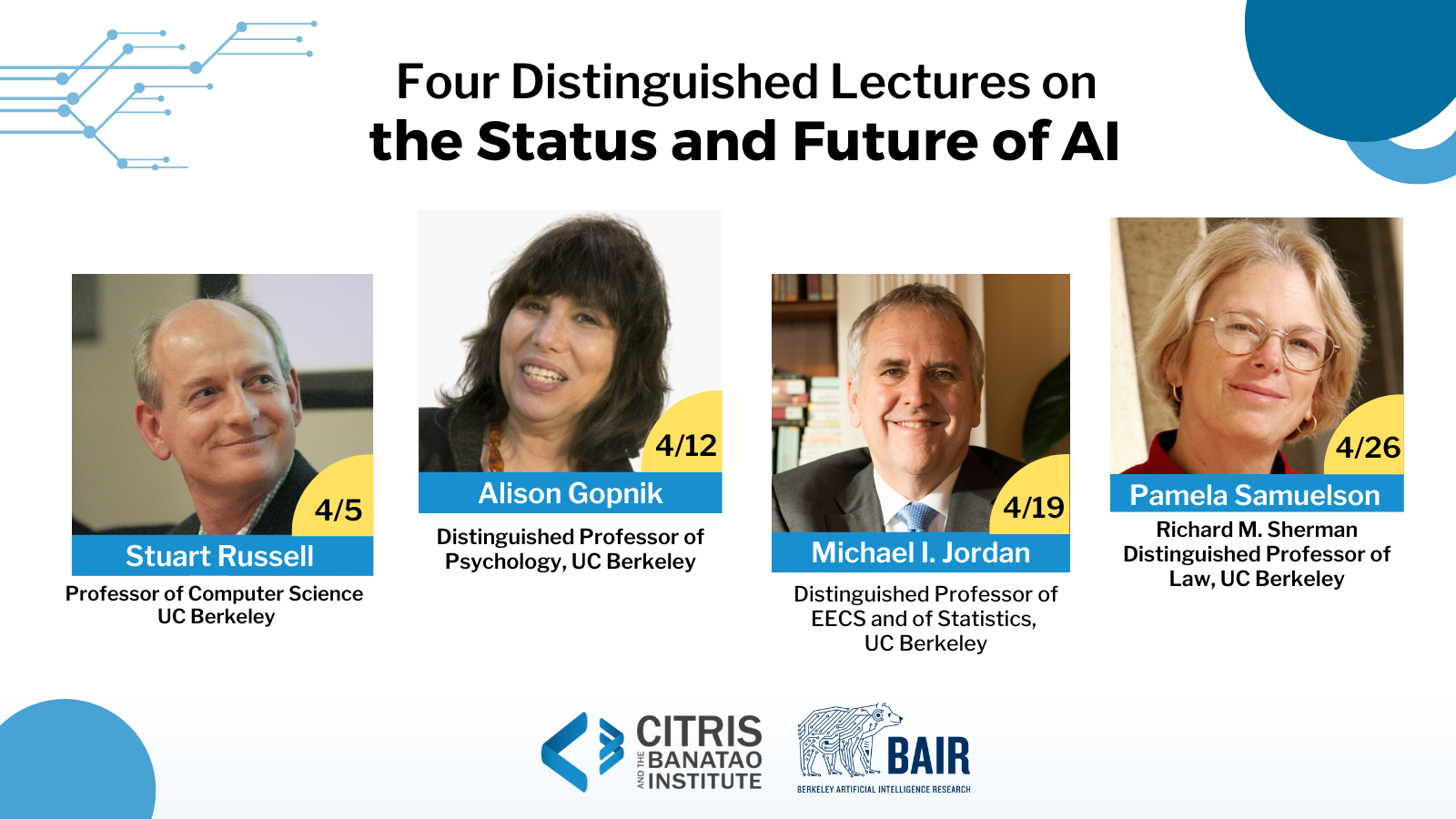

Distinguished Lectures on the Status and Future of AI

04 Apr 2023

Four distinguished lectures on the Status and Future of AI organised by CITRIS Research Exchange and BAIR will be livestreamed and held in person at CHAI.

Brief of Center for Democracy and Technology and 6 Technologists as Amici Curiae in Support of Respondent

17 Feb 2023

CHAI’s Jonathan Stray joined Brandie Nonnecke from Berkeley’s CITRIS Policy Lab, the Center for Democracy and Technology, and other scholars in filing a brief with the Supreme Court in an upcoming case, “Gonzalez v. Google”, which asks whether “targeted recommendations” should be protected under the same law that protects the “publishing” of third-party content.

Fairness and Sequential Decision Making: Limits, Lessons, and Opportunities

31 Jan 2023

As automated decision making and decision assistance systems become common in everyday life, research on the prevention or mitigation of potential harms that arise from decisions made by these systems has proliferated.

Inner and Outer Alignment Decompose One Hard Problem Into Two Extremely Hard Problems

03 Jan 2023

One prevalent alignment strategy is to 1) capture “what we want” in a loss function to a very high degree, 2) use that loss function to train the AI, and 3) get the AI to exclusively care about optimizing that objective.