News

Joe Halpern Becomes Moore Fellow at Caltech

20 Mar 2019

Joe Halpern has been accepted as a Moore Distinguished Scholar in Caltech’s Division of the Humanities and Social Sciences. You can read more here.

Michael Wellman Featured in Panel on the Future of AI

13 Mar 2019

Michael Wellman was featured on the University of Michigan’s Panel on the Future of AI. You can find a video of the panel here.

Rohin Shah Publishes Blog Posts on Learning Preferences

14 Feb 2019

CHAI grad student Rohin Shah published two blog posts on his recent paper, Preferences Implicit in the State of the World. The first, Learning Preferences by Looking at the World, appeared on the Berkeley AI Research (BAIR) Blog. The second, an article of the same name published on the AI Alignment Forum, goes into more detail on the original paper and its background.

Joe Halpern Elected to National Academy of Engineering

13 Feb 2019

CHAI Professor Joe Halpern received one of the highest honors in the field of engineering, joining the National Academy of Engineering for his outstanding contribution to “reasoning about knowledge, belief, and uncertainty and their applications to distributed computing and multiagent systems.” Read more here.

Daniel Filan Publishes Blog Post on Impact Measures

CHAI grad student Daniel Filan published a blog post entitled Test Cases for Impact Measures on the AI Alignment Forum.

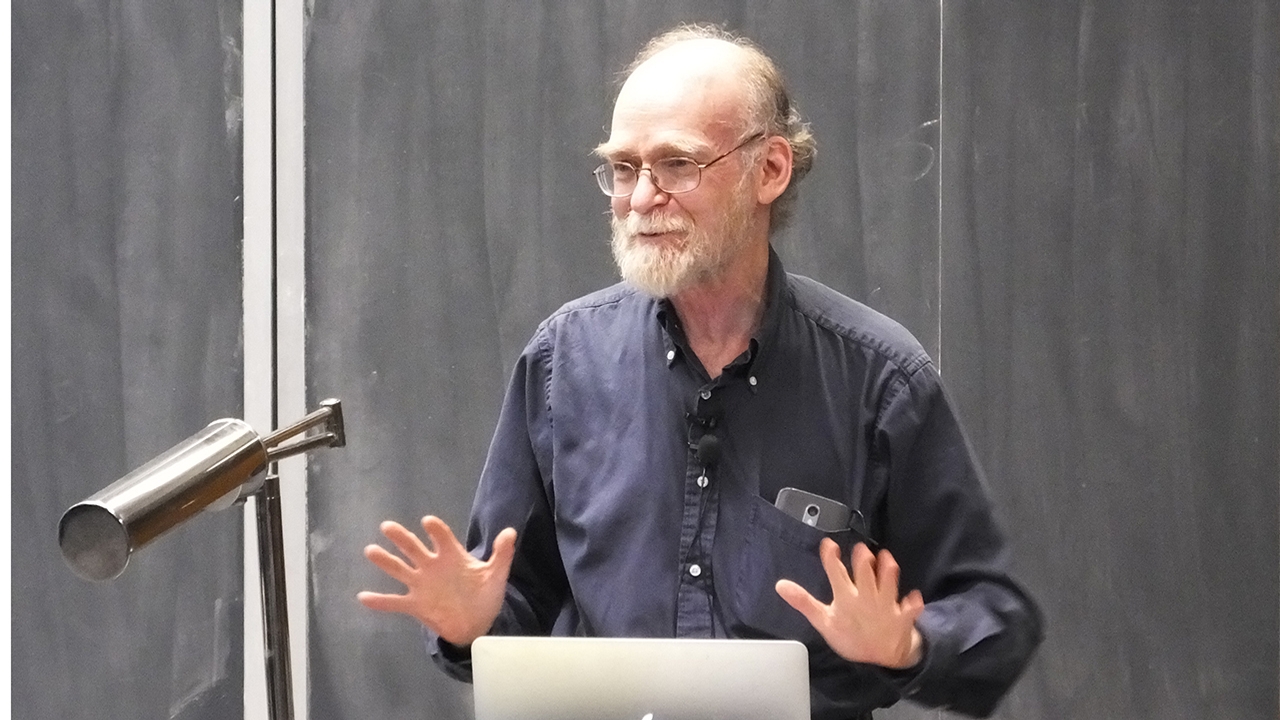

Stuart Russell Gives Invited Talk to Netherlands Ministry of Foreign Affairs

21 Jan 2019

Professor Stuart Russell gave a talk to the Netherlands Ministry of Foreign Affairs on the risks of autonomous weapons. Professor Russell has been involved in raising awareness of the risks that autonomous weapons pose, calling them the New Weapons of Mass Destruction due to their ability to scale with a small number of human operators.

Former CHAI Intern Wins AI Alignment Prize

20 Jan 2019

Alex Turner, a former CHAI intern, was announced as one of the two winners of the AI Alignment Prize for his paper Penalizing Impact via Attainable Utility Preservation. The prize is awarded through a competition for submitted papers that further understanding in the field of AI safety. More information can be found here.

Stuart Russell Receives AAAI Feigenbaum Prize

15 Jan 2019

The Association for the Advancement of Artificial Intelligence (AAAI) announced that CHAI PI Stuart Russell is the winner of the 2019 Feigenbaum Prize. The prize was awarded at the AAAI conference in Honolulu in January “in recognition of (his) high-impact contributions to the field of artificial intelligence through innovation and achievement in probabilistic knowledge representation, reasoning, and learning, including its application to global seismic monitoring for the Comprehensive Nuclear-Test-Ban Treaty.” The Feigenbaum Prize is awarded biennially to recognize and encourage outstanding Artificial Intelligence research advances that are made by using experimental methods of computer science.