News

International Conference on Learning Representations Accepts “Adversarial Policies: Attacking Deep Reinforcement Learning”

06 Dec 2019

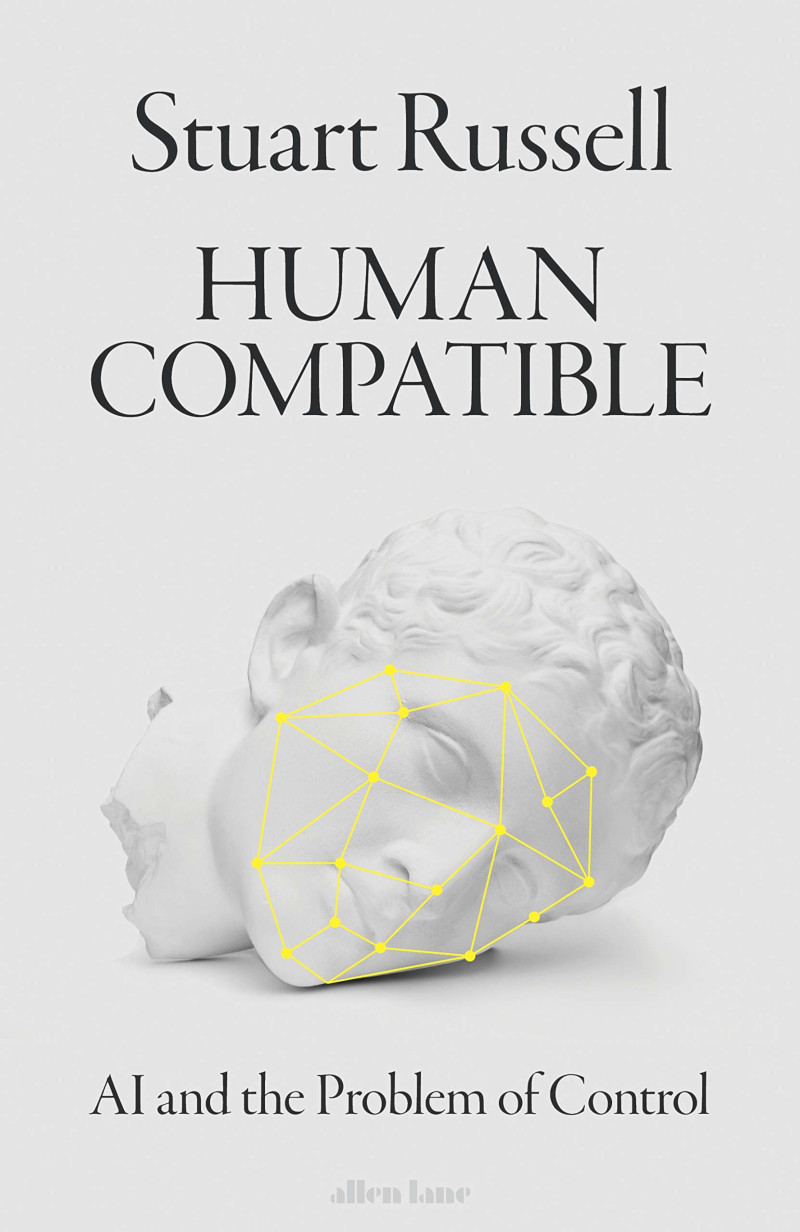

The paper “Adversarial Policies: Attacking Deep Reinforcement Learning”, by Adam Gleave, Michael Dennis, Neel Kant, Cody Wild, Sergey Levine, and Stuart Russell, has been accepted to the International Conference on Learning Representations (ICLR).

Rohin Shah and Micah Carroll Publish “Collaborating with Humans Requires Understanding Them”

02 Nov 2019

CHAI PhD student Rohin Shah and intern Micah Carroll wrote a post on human-AI collaboration for the Berkeley AI Research Blog.

Rohin Shah Professionalizes the Alignment Newsletter

28 Sep 2019

CHAI PhD student Rohin Shah’s Alignment Newsletter has grown from a handful of volunteers to a team of people paid to summarize content.

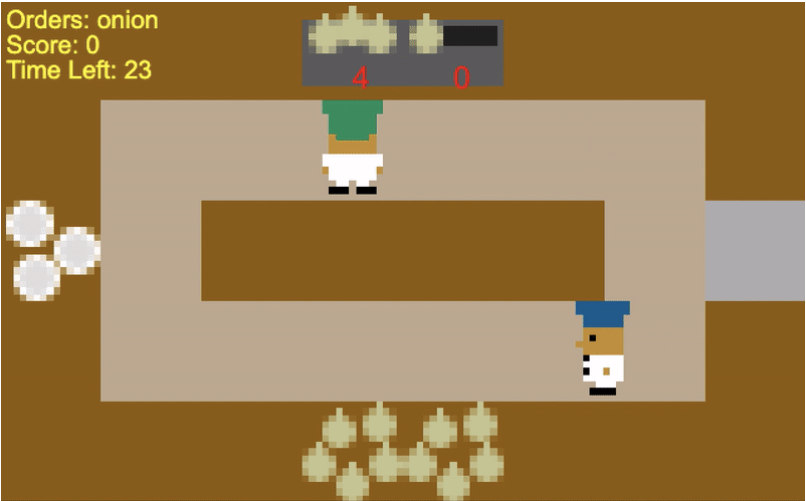

NeurIPS 2019 Accepts CHAI Researchers’ Paper “On the Utility of Learning about Humans for Human-AI Coordination”

The paper, authored by Micah Carroll, Rohin Shah, Tom Griffiths, Pieter Abbeel, and Anca Dragan along with two researchers not affiliated with CHAI, was accepted to NeurIPS 2019. An arXiv link for the paper will be available shortly.

Siddharth Srivastava Awarded NSF Grant on AI and the Future of Work

13 Sep 2019

Siddharth Srivastava, along with other faculty from Arizona State University, was awarded a grant as part of the NSF’s Convergence Accelerator program. The project focuses on safe, adaptive AI systems and robots that enable workers to learn how to use them on the fly. The central question behind the research is: how can we train people to use adaptive AI systems whose behavior and functionality are expected to change from day to day? The team’s approach uses self-explaining AI to enable on-the-fly training. You can read more about the project here.

Rohin Shah Publishes “Clarifying Some Key Hypotheses in AI Alignment” on the Alignment Forum

27 Aug 2019

CHAI PhD student Rohin Shah, along with Ben Cottier, published the blog post “Clarifying Some Key Hypotheses in AI Alignment” on the AI Alignment Forum. The post maps out key, and sometimes controversial, hypotheses about the AI alignment problem and how they relate to one another.

Siddharth Srivastava Publishes “Why Can’t You Do That, HAL? Explaining Unsolvability of Planning Tasks”

17 Aug 2019

CHAI PI Siddharth Srivastava, along with his co-authors Sarath Sreedharan, Rao Kambhampati, and David Smith, published “Why Can’t You Do That, HAL? Explaining Unsolvability of Planning Tasks” in the proceedings of the 2019 International Joint Conference on Artificial Intelligence (IJCAI). As anyone who has talked to a 3-year-old knows, explaining why something can’t be done can be harder than explaining how to solve a problem. The paper builds on this observation and presents new work on enabling AI systems to explain why a planning task is unsolvable.

Michael Wellman Gives Talk “Trend-Following Trading Strategies and Financial Market Stability” at ICML 2019

16 Aug 2019

CHAI PI Michael Wellman gave a talk at the ICML 2019 Workshop on AI and Finance on how one form of algorithmic (AI) trading strategy, trend following, can affect financial market stability. The video of the talk can be found here.